- Apple Messages App How To

- Apple Messages App Not Working

- Apple Messages App For Imac

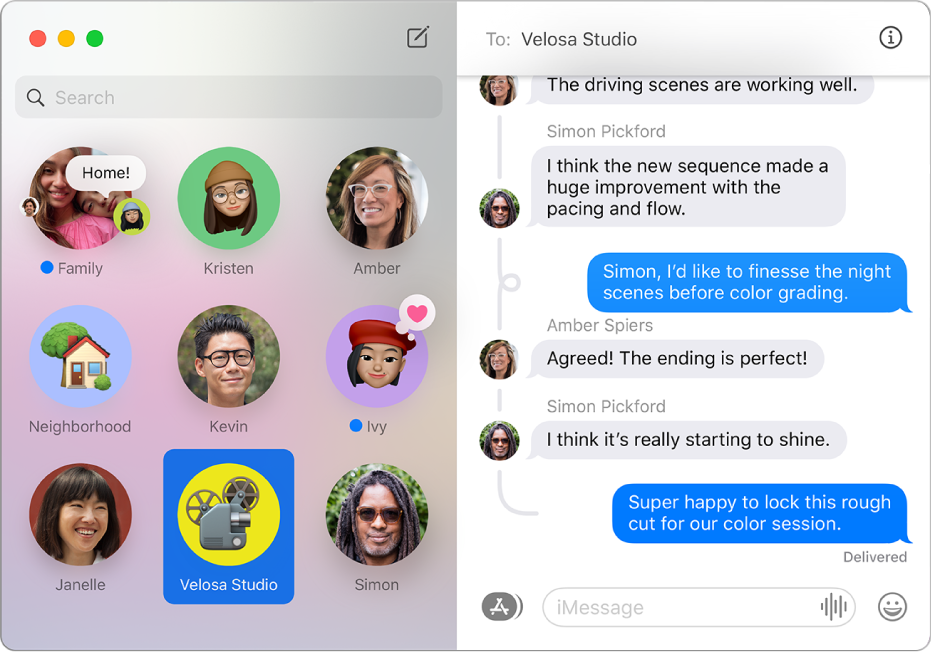

- Apple Messages App For Mac

- iPhone texting is suddenly a lot more fun, and a lot more complicated. Here's how to use all the new features.

- Dec 26, 2016 So I recommend using these two resources to force close the Messages app and restart the iPhone: Force an app to close on your iPhone, iPad, or iPod touch. Restart your iPhone, iPad, or iPod touch. Please test after you've done both and let us know if the conversation is still at the top of the list.

At Apple, our goal is to create technology that empowers people and enriches their lives — while helping them stay safe. We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM).

I would suggest going to Settings > Messages > Send & Receive, tapping your Apple ID, signing out, and then signing back in to see if that resolves the issue. Also try updating iMessage; it is sometimes disabled by the system when it is out of date. This mainly happens with browser add-ons. If possible, reinstalling is the better option.

Aug 05, 2021

Package name com.arvin.applemessage Program by Arvin Jayanake 16, Kandamawatha, Patabendimulla, Ambalangoda, Sri Lanka.

Apple is introducing new child safety features in three areas, developed in collaboration with child safety experts. First, new communication tools will enable parents to play a more informed role in helping their children navigate communication online. The Messages app will use on-device machine learning to warn about sensitive content, while keeping private communications unreadable by Apple.

Next, iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy. CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos.

Finally, updates to Siri and Search provide parents and children expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.

These features are coming later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.*

This program is ambitious, and protecting children is an important responsibility. These efforts will evolve and expand over time.

Communication safety in Messages

The Messages app will add new tools to warn children and their parents when receiving or sending sexually explicit photos.

When receiving this type of content, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. As an additional precaution, the child can also be told that, to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it.

Messages uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit. The feature is designed so that Apple does not get access to the messages.

This feature is coming in an update later this year to accounts set up as families in iCloud for iOS 15, iPadOS 15, and macOS Monterey.*

Messages will warn children and their parents when receiving or sending sexually explicit photos.

CSAM detection

Another important concern is the spread of Child Sexual Abuse Material (CSAM) online. CSAM refers to content that depicts sexually explicit activities involving a child.

To help address this, new technology in iOS and iPadOS* will allow Apple to detect known CSAM images stored in iCloud Photos. This will enable Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC). NCMEC acts as a comprehensive reporting center for CSAM and works in collaboration with law enforcement agencies across the United States.

Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices.
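The basic idea of matching an image against a set of known hashes can be illustrated with a minimal Python sketch. This is not Apple's implementation. It assumes SHA-256 as a stand-in for the image hash (a real system would need a hash that tolerates resizing and re-encoding), and it leaves out both the transformation that makes the database unreadable and the cryptographic step that hides the match result from the device. The function names `image_hash`, `build_known_hashes`, and `matches_known` are hypothetical.

```python
import hashlib
from typing import FrozenSet, Iterable


def image_hash(image_bytes: bytes) -> str:
    """Return a hex digest for the image bytes.

    Assumption: SHA-256 is used purely as a placeholder. It only matches
    byte-identical files, unlike the hash a production system would use.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def build_known_hashes(images: Iterable[bytes]) -> FrozenSet[str]:
    """Build an immutable set of hashes from a collection of known images."""
    return frozenset(image_hash(data) for data in images)


def matches_known(image_bytes: bytes, known_hashes: FrozenSet[str]) -> bool:
    """Check whether the image's hash appears in the known-hash set."""
    return image_hash(image_bytes) in known_hashes


# Placeholder byte strings stand in for image files.
known = build_known_hashes([b"example-a", b"example-b"])
print(matches_known(b"example-a", known))
print(matches_known(b"something-else", known))
```

In this simplified form the device learns the match result directly, which is exactly what the described system is designed to avoid.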

Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image.

Using another technology called threshold secret sharing, the system ensures the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content. The threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account.
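To make the threshold idea concrete, here is a minimal sketch of Shamir's secret sharing, a classic threshold scheme. The text does not say which construction Apple uses, so this is an illustration of the general technique only. The modulus `PRIME` and the function names `split_secret` and `recover_secret` are assumptions chosen for the example.

```python
import secrets
from typing import List, Tuple

# Assumption: a Mersenne prime used as the field modulus for this example.
PRIME = 2**127 - 1

Share = Tuple[int, int]


def split_secret(secret: int, threshold: int, num_shares: int) -> List[Share]:
    """Split `secret` into shares; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= num_shares:
        raise ValueError("threshold must be between 1 and num_shares")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, evaluated mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Share]) -> int:
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = split_secret(123456789, threshold=3, num_shares=5)
print(recover_secret(shares[:3]) == 123456789)
```

With fewer than `threshold` shares, interpolation yields a value that is unrelated to the secret, which mirrors the property described above: nothing can be interpreted until the threshold is crossed.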

Only when the threshold is exceeded does the cryptographic technology allow Apple to interpret the contents of the safety vouchers associated with the matching CSAM images. Apple then manually reviews each report to confirm there is a match, disables the user’s account, and sends a report to NCMEC. If a user feels their account has been mistakenly flagged, they can file an appeal to have their account reinstated.

This innovative new technology allows Apple to provide valuable and actionable information to NCMEC and law enforcement regarding the proliferation of known CSAM. And it does so while providing significant privacy benefits over existing techniques since Apple only learns about users’ photos if they have a collection of known CSAM in their iCloud Photos account. Even in these cases, Apple only learns about images that match known CSAM.

More Information

We have provided more information about these features in the documents below, including technical summaries, proofs, and independent assessments of the CSAM-detection system from cryptography and machine learning experts.